Jaesung Bae

jb82 [at] illinois [dot] edu

About

I am a PhD student in the Computer Science (CS) Department at the University of Illinois Urbana-Champaign, advised by Prof. Minje Kim and Prof. Paris Smaragdis. My current research interests include speech and audio representation learning, as well as data augmentation methods for low-resource and underrepresented domains in speech and audio.

In the summer of 2025, I had the privilege of working as a Research Scientist Intern at the Meta Superintelligence Lab. My project focused on enhancing the understanding and generation capabilities of full-duplex and multimodal (speech and text) large language models.

Previously, I worked as a speech AI researcher at Samsung Research, where my main research topics included personalized and zero-shot on-device TTS systems. I am proud to have contributed to the TTS systems integrated in the Galaxy S24. Before that, I was at NCSOFT, a game company, where I primarily studied expressive TTS and prosody-controllable TTS systems.

I earned my MS in Electrical Engineering from KAIST, where I was advised by Prof. Daeshik Kim in the BREIL lab, and my BS in Electrical and Electronic Engineering from Yonsei University.

Below are my projects, publications, invited talks, and academic services. Please refer to my CV for further details.

News

- (Dec 2024) I am organizing the "ICASSP 2025 Generative Data Augmentation Challenge: Zero-Shot Speech Synthesis for Personalized Speech Enhancement." Looking forward to your participation! [link]

- (Aug 2024) Starting my PhD at the University of Illinois Urbana-Champaign (UIUC).

- (Dec 2023) Two papers have been accepted to ICASSP 2024! (one first author, one second author)

Publications

*: Equal Contribution

Generative Data Augmentation Challenge: Zero-Shot Speech Synthesis for Personalized Speech Enhancement

Jae-Sung Bae, Anastasia Kuznetsova, Dinesh Manocha, John Hershey, Trausti Kristjansson, and Minje Kim

In Proc. of the IEEE Int. Conf. on Acoustics, Speech, and Signal Processing Workshops (ICASSPW): Generative Data Augmentation for Real-World Signal Processing Applications (GenDA 2025), 2025.

[paper]

[code]

[website]

MELS-TTS : Multi-Emotion Multi-Lingual Multi-Speaker Text-to-Speech System via Disentangled Style Tokens

Heejin Choi, Jae-Sung Bae, Joun Yeop Lee, Seongkyu Mun, Jihwan Lee, Hoon-Young Cho, Chanwoo Kim

In Proc. IEEE Int. Conf. on Acoustics, Speech and Signal Processing (ICASSP), 2024.

End-to-End Speech Command Recognition with Capsule Network

Jae-Sung Bae, Dae-Shik Kim

In Proc. INTERSPEECH, 2018.

[paper]

Projects

Click each project to see demos and further information.

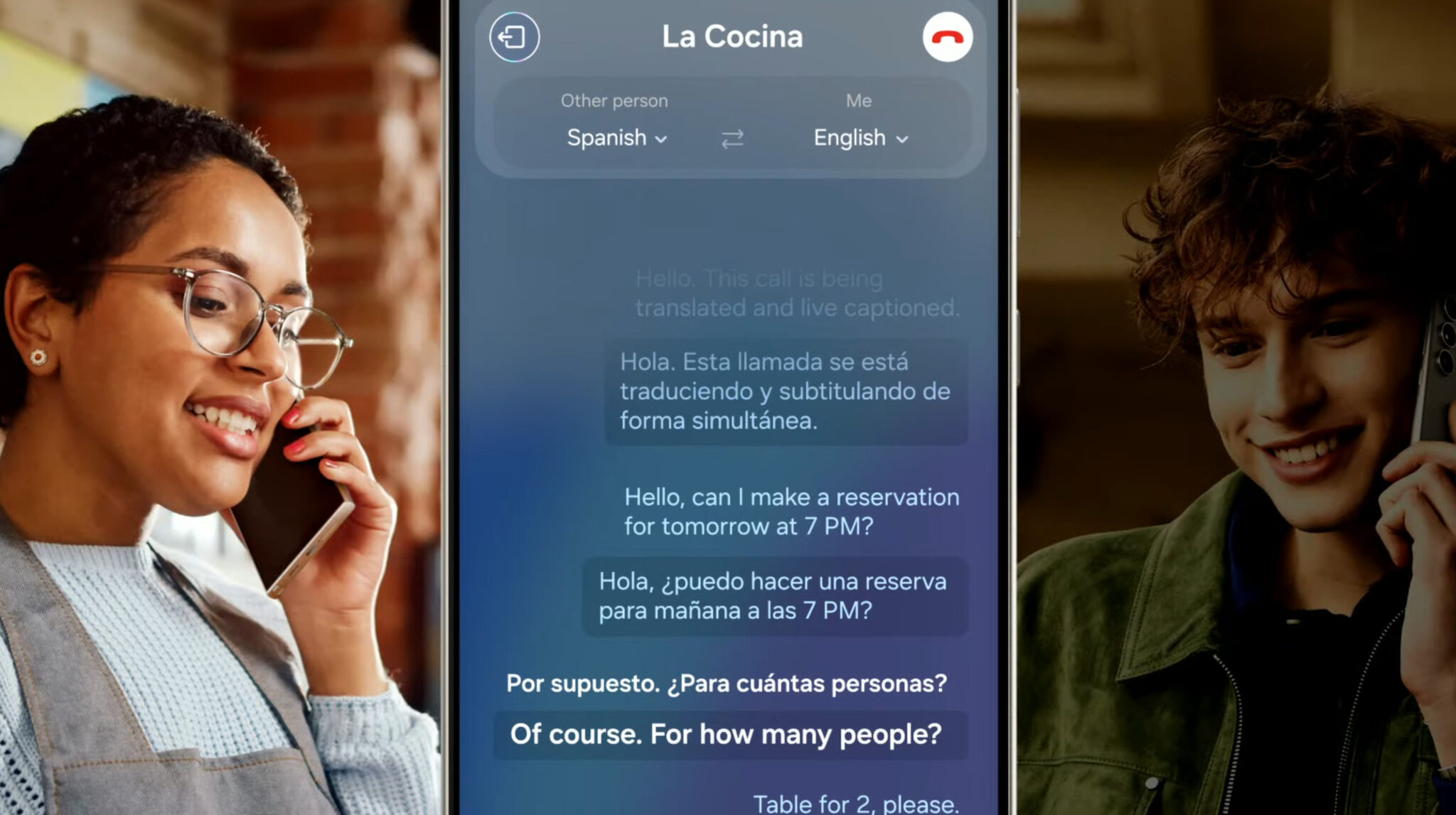

On-device TTS System in Various Languages for Galaxy S24's Live Translation

Mar 2023 - Jan 2024 (@Samsung Research)

I contributed to the research and development of an on-device TTS system in eight different languages, which is included as a Live Translation feature and introduced as a main AI feature in the Galaxy S24. My contribution involved enhancing the model architecture and achieving a high-quality TTS system that supports various languages with a reduced model size.

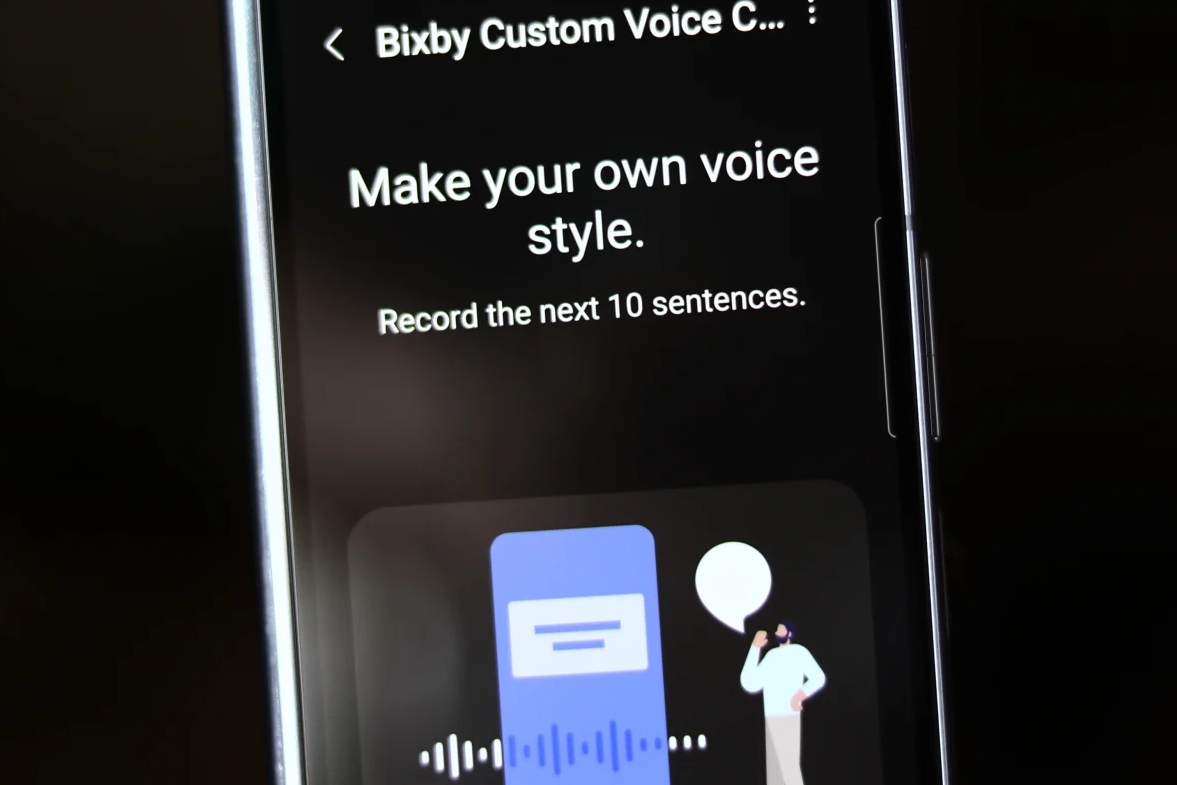

On-device Personalized TTS System for Bixby Custom Voice Creation

May 2022 - Jan 2024 (@Samsung Research)

I contributed to the research and development of an on-device personalized TTS system, which was integrated into Samsung Galaxy Bixby's Custom Voice Creation and used in the Bixby Text-call functionality. The system creates a personalized voice by fine-tuning the TTS model directly on the user’s device with just 10 utterances.

Fine-grained Prosody Control of TTS System (prototype web service)

Mar 2021 - Apr 2022 (@NCSOFT)

I researched and developed a TTS system capable of controlling the prosody of speech at a fine-grained level. With this system, users could modify the speech to have the desired prosody. The system was released as an in-company web service and was widely used to make guide videos for NCSOFT's games.

TTS System for K-pop Fandom Platform, “UNIVERSE” (live service)

Mar 2019 - Apr 2022 (@NCSOFT)

I contributed to the research and development of a multi-speaker TTS system that replicates the voices of approximately 100 K-pop artists within a single TTS system. This TTS system was used in the "UNIVERSE" service, a K-pop fan community platform.

TTS System in Baseball Broadcast Scenario

Mar 2019 - Mar 2021 (@NCSOFT)

I researched and developed an expressive TTS system that can generate speech with dynamic expressions suitable for diverse baseball situations. I published several demos on NCSOFT’s official blog and in news articles. I recommend clicking this project to see the demo videos.

Invited Talks

End-to-End Speech Command Recognition with Capsule Network

NAVER Corp., Seong-Nam, Republic of Korea

Sep 2018

Academic Services

- Challenge Organizer for the ICASSP 2025 Generative Data Augmentation for Real-World Signal Processing Applications (GenDA 2025) Workshop: Zero-Shot Speech Synthesis for Personalized Speech Enhancement [link]

- Reviewer: AAAI 2025