Jaesung Bae

bjs2279 [at] gmail [dot] com

About

I am presently working for Samsung Research as a speech AI researcher. My main research topic has recently been personalized and zero-shot on-device TTS systems. Previously, I worked at NCSOFT, a game company, mainly studying expressive TTS and prosody controllable TTS systems. My academic background includes a BS in Electrical and Electronic Engineering from Yonsei University and I earned my MS in Electrical Engineering from KAIST in BREIL lab, advised by Daeshik Kim. I am interested in speech synthesis and speech representation learing of prosody and spaeker identity. Currently, I am widening my interest to combining speech synthesis with techniques from various fields, such as spontaneous speech-to-speech, multimodal generation, video dubbing, etc.

Below shows my projects, publications, and invited talks. Please refere to my CV for further details.

News

- (12/13/2023) Two papers have been accepted to ICASSP 2024! (one first author, one second author)

Projects

You can click each project and check demos and further information.

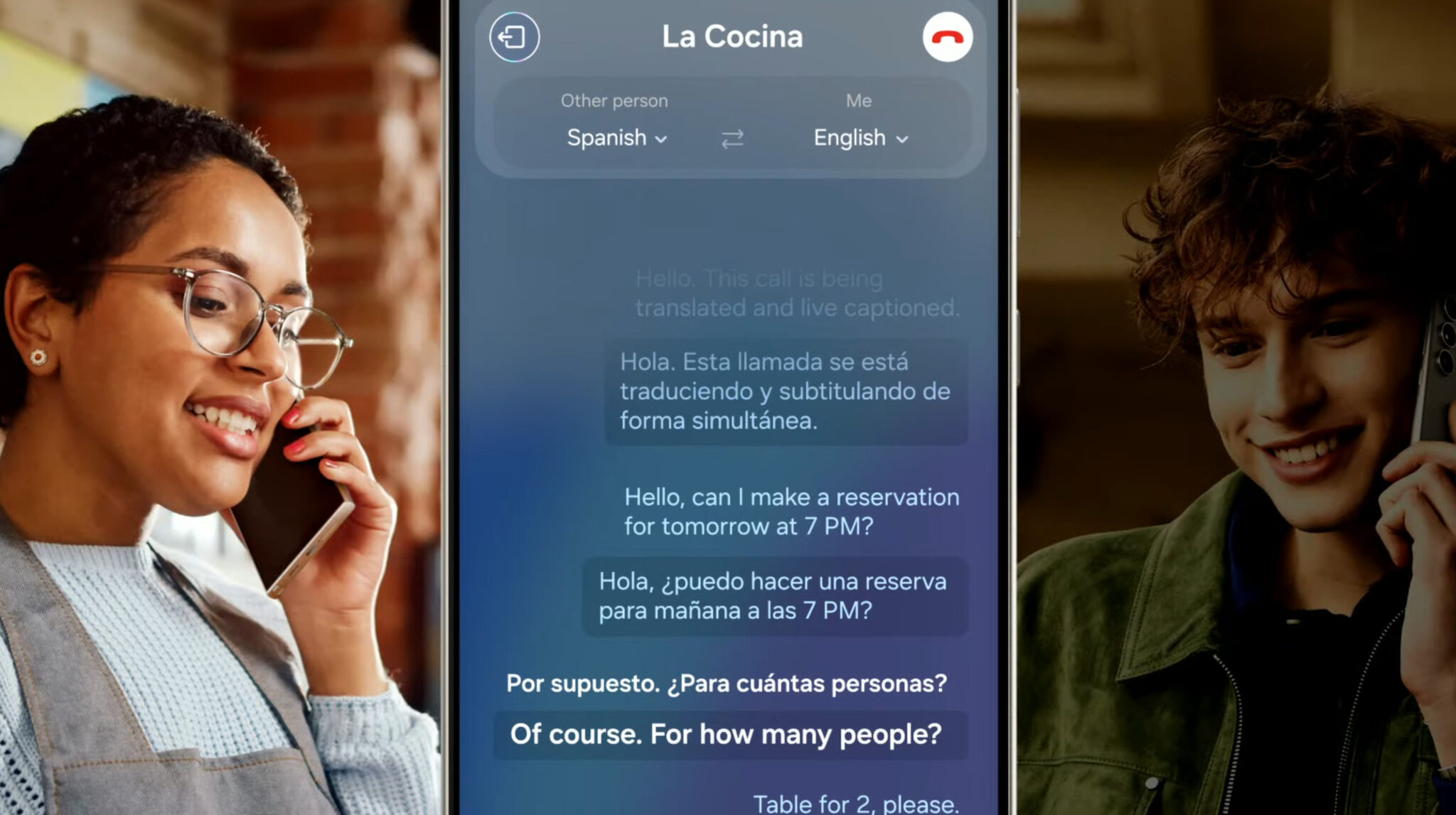

On-device TTS System in various languages for Galaxy S24's Live Translation

Mar 2023 - Jan 2024 (@Samsung Research)

I contributed to the research and development of an on-device TTS system in eight different languages, which is included as a Live Translation feature and introduced as a main AI feature in the Galaxy S24. My contribution involved enhancing the model architecture and achieving a high-quality TTS system that supports various languages with a reduced model size.

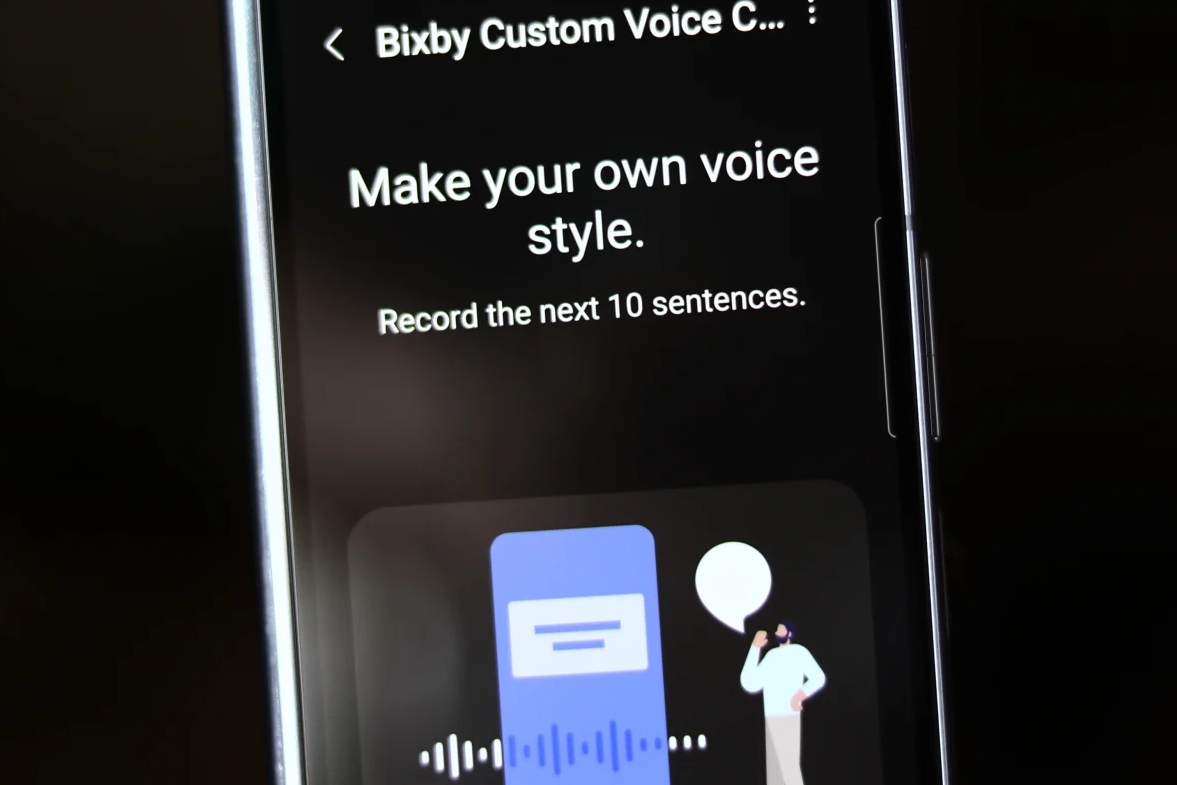

On-device Personalized TTS System for Bixby Custom Voice Creation

May 2022 - Jan 2024 (@Samsung Research)

I contributed to the research and development of an on-device personalized TTS system, which was integrated into Samsung Galaxy Bixby's Custom Voice Creation and utilized within Bixby Text-call functionality. This system can create a personalized TTS system by fine-tuning the TTS directly on the user’s device with just 10 utterances.

Fine-grained Prosody Control of TTS System (prototype web service)

Mar 2021 - Apr 2022 (@NCSOFT)

I conducted research and developed a TTS system that is capable of controlling the prosody of speech in a fine-grained level. With this system, users were able to modify the speech to have desired prosody. This system is released as an in-company web service and was widely used to make an guide videos of NCSOFT's game.

TTS System for K-pop Fandom Platform, “UNIVERSE” (live service)

Mar 2019 - Apr 2022 (@NCSOFT)

I contributed to the research and development of a multi-speaker TTS system replicating the voices of numerous K-pop artists, approximately 100 in total, within a single TTS system. This TTS system was used in "UNIVERSE" service, which is a K-pop fan community platform.

TTS System in Baseball Broadcast Scenario

Mar 2019 - Mar 2021 (@NCSOFT)

I researched and developed an expressive TTS system that can generate speech with dynamic expressions suitable for diverse baseball situations. I published several demos on NCSOFT’s official blog and news articles. Kindly recommand to click this project, and see the demo videos.